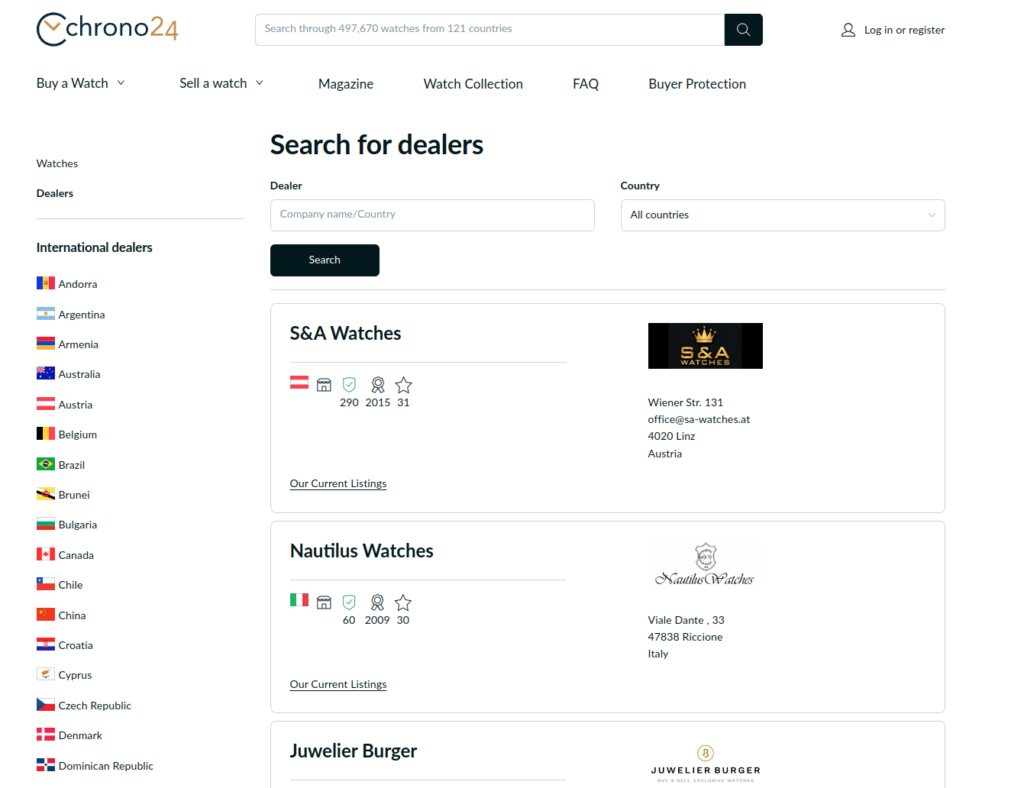

I need to extract information from this website https://www.chrono24.com/search/haendler.htm. Inside we can find watch companies. We are asked to extract the email of those companies that have a physical store and not only online presence. But we have a big problem. This website does not provide us with the email or web address of these companies.

So to solve this problem I will follow the following steps:

-

Extract the name of the watch companies from the above mentioned page.

-

Create a spider that will crawl google search for the companies and provide us with the web addresses of these companies whenever possible.

-

Create another spider that enters these addresses and extracts the emails it finds.

-

Manually review the results obtained and complete the few missing ones.

To do all this we will use the scraping and crawling framework Scrapy and Splash. Splash is a lightweight web browser that is capable of processing multiple pages in parallel, executing custom JavaScript in the page context, and much more.

1 – Extract info from web

– First we need to import libraries:

import scrapy import urllib import json from scrapy.selector import Selector from os import remove, path

-Creating a class with diferents methods inside like this:

class RelojesSpider(scrapy.Spider):

name = 'relojes'

base_url = 'https://www.chrono24.com/search/haendler.htm?'

# remove file datos.json if exist

if path.exists('data_web.json'):

remove('data_web.json')

params={

'showpage':'',

}

features = [] # create data_web.json file

def start_requests(self):

for i in range(1,111):

self.params['showpage'] = i

next_page = self.base_url + urllib.parse.urlencode(self.params)

print("AAAAA: ",next_page)

yield scrapy.Request(next_page, callback = self.parse)

def parse(self, response):

for card in response.css('.border-radius-large'):

company = card.css('span a::text').get()

country = card.css('.inline-block::text').get()

if card.css('.i-retail-store'): #Choose with presence stores

items = {

'company' : company,

'country' : country

}

yield items #salida para el archivo creado con Scrapy FEED(en settings)

self.features.append(items) #creating data_web.json file

with open('data_web.json', 'w+') as json_file:

json_file.write(json.dumps(self.features, indent=2) +'\n')

-I get all the names of the stores with physical presence and their country.:

[

{

"company": "S&A Watches",

"country": "Austria"

},

{

"company": "Nautilus Watches",

"country": "Italy"

},

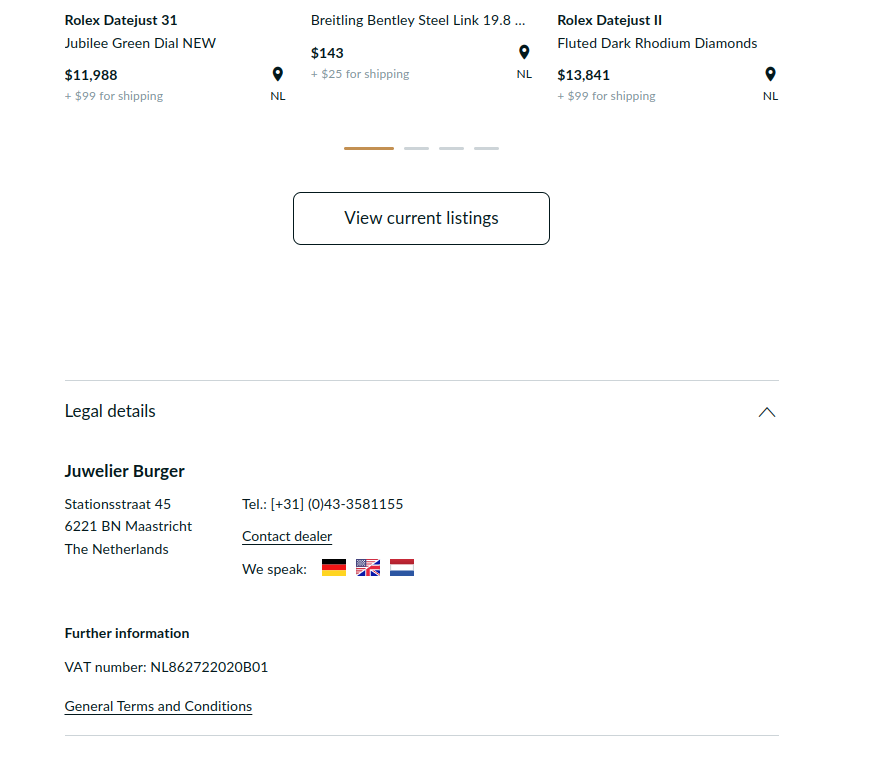

{

"company": "Juwelier Burger",

"country": "The Netherlands"

},

{

"company": "World Of Watches ATMOS Roma s.r.l",

"country": "Italy"

},

.........................................................

.........................................................

.........................................................

{

"company": "ZERO Co.,Ltd.",

"country": "Japan"

},

{

"company": "Zetton",

"country": "Japan"

},

{

"company": "\u304f\u3058\u3089time",

"country": "Japan"

}

]

2 – Crawl google search with this directions

– First we need to import libraries:

import requests import urllib from requests_html import HTMLSession import json from os import remove, path

def get_source(url):

try:

session = HTMLSession()

response = session.get(url)

return response

except requests.exceptions.RequestException as e:

print(e)

def get_results(query):

query = urllib.parse.quote_plus(query)

response = get_source("https://www.google.es/search?q=" + query)

return response

def parse_results(response):

css_identifier_result = ".MjjYud"

css_identifier_title = "h3"

css_identifier_link = ".yuRUbf a"

css_identifier_text = ".VwiC3b"

results = response.html.find(css_identifier_result)

output = []

#We dont want search this domains

not_domains = (

'https://www.google.',

'https://google.',

'https://webcache.googleusercontent.',

'http://webcache.googleusercontent.',

'https://policies.google.',

'https://support.google.',

'https://maps.google.',

'https://www.chrono24.',

'https://www.instagram.',

'https://www.youtube.',

'https://business.google.',

'https://www.facebook.',

'https://translate.google.',

'https://m.facebook.',

'https://timepeaks',

'https://www.yelp',

)

for result in results:

try:

link = result.find(css_identifier_link, first=True).attrs['href']

if link.startswith(not_domains):

pass

else:

item = {

#'title': result.find(css_identifier_title, first=True).text,

#'link': link.text,

'link': link,

#'text': result.find(css_identifier_text, first=True).text

}

output.append(item)

break #show only the first link

except:

pass

return output

def google_search(query):

response = get_results(query)

return parse_results(response)

#remove file keyWords_liks.json if exist

if path.exists('keyWords_liks.json'):

remove('keyWords_liks.json')

#1 - Opening json file with the key words scraping and put in a file

content= ''

with open("data_web.json", 'r') as f:

content = json.load(f)

#2 - we go through the json and get 'company' and search for google with google_search() that we created above.

#3 - we save the results of the addresses in keyWords_liks.json

features =[]

for i,cont in enumerate(content):

words = content[i]['company']

words_key = words + ' watches' #our key word(if its necessary)

results = google_search(words_key)

print("CONTENT: ",json.dumps(content[i], indent=2))

print(results)

print("\n")

for elem in results:

for k,v in elem.items():

items = {

'key_words': words,

'link' : v

}

features.append(items)

with open('keyWords_liks.json', 'w') as json_file:

json_file.write(json.dumps(features, indent=2) + '\n')

-I get all the names of the stores(key_words) with physical presence their possible link:

[

{

"key_words": "S&A Watches",

"link": "https://sell.sa-watches.at/"

},

{

"key_words": "Nautilus Watches",

"link": "https://www.patek.com/en/collection/nautilus"

},

{

"key_words": "Juwelier Burger",

"link": "https://www.juwelierburger.com/DE/index"

},

{

"key_words": "World Of Watches ATMOS Roma s.r.l",

"link": "https://watchesinrome.it/"

},

.........................................................

.........................................................

.........................................................

},

{

"key_words": "ZERO Co.,Ltd.",

"link": "https://zerowest.watch/"

},

{

"key_words": "Zetton",

"link": "https://www.ebay.com/str/zettonjapan"

},

{

"key_words": "\u304f\u3058\u3089time",

"link": "https://kujiratime.com/"

}

]

3 – Spider to extract emails

– First we need to import libraries:

import scrapy import json # for regular expression import re # for selenium request from scrapy_selenium import SeleniumRequest from os import remove, path # for link extraction from scrapy.linkextractors.lxmlhtml import LxmlLinkExtractor from parsel import Selector from scrapy_splash import SplashRequest import pandas as pd

class EmailspiderSpider2(scrapy.Spider):

# name of spider

name = 'emailspider2'

features = []

# remove file datos.json if exist

if path.exists('allData.json'):

remove('allData.json')

# remove file datos.json if exist

if path.exists('good.json'):

remove('good.json')

if path.exists('fail.json'):

remove('fail.json')

with open("data_words_search.json", 'r') as f:

contenta = json.load(f)

def start_requests(self):

for i, cont in enumerate(self.contenta):

company = self.contenta[i]['key_words']

link = self.contenta[i]['link']

yield SplashRequest(

url=link,

callback=self.parse,

dont_filter=True,

meta={'company' : company},

#args={'wait': 15},

)

def parse(self, response):

company = response.meta.get('company')

links = LxmlLinkExtractor(allow=()).extract_links(response)

Finallinks = [str(link.url) for link in links]

links = []

for link in Finallinks:

if ('Contact' in link or 'contact' in link or 'service' in link or 'team' in link or 'Contacts' in link or 'About' in link or 'about' in link or 'CONTACT' in link or 'ABOUT' in link):

links.append(link)

links.append(str(response.url))

l = links[0] añadida)

links.pop(0)

# meta helps us to transfer links list from parse to parse_link

yield SplashRequest(

url=l,

callback=self.parse_link,

dont_filter=True,

meta={'links': links, 'company' : company},

#args={'wait': 1},

)

def parse_link(self, response):

company = response.meta.get('company')

links = response.meta['links']

flag = 0

print("LINKS_2: ", links)

# links that contains following bad words are discarded

bad_words = ['facebook', 'instagram', 'youtube', 'twitter', 'wiki', 'linkedin']

for word in bad_words:

# if any bad word is found in the current page url flag is assigned to 1

if word in str(response.url):

flag = 1

break

# if flag is 1 then no need to get email from that url/page

if (flag != 1):

html_text = str(response.text)

# regular expression used for search emails

email_list = re.findall('[\w\.-]+@[\w\.-]+', html_text)

# set of email_list to get unique

email_list = set(email_list)

uniqueemail = set() #creamos lista de emails unicos con set

if (len(email_list) != 0):

for i in email_list:

# adding email to final uniqueemail

uniqueemail.add(i)

# parse_link function is called till

# if condition satisfy

# else move to parsed function

if (len(links) > 0):

l = links[0]

links.pop(0)

yield SplashRequest(

url=l,

callback=self.parse_link,

dont_filter=True,

meta={'links': links, 'company' : company},

#args={'wait': 2},

)

else:

yield SplashRequest(

url=response.url,

callback=self.parsed,

dont_filter=True,

meta={'company': company, 'uniqueemail' : uniqueemail},

#meta={'links': links, 'company': company},

# args={'wait': 2},

)

def parsed(self, response):

# emails list of uniqueemail set

uniqueemail = response.meta.get('uniqueemail')

company = response.meta.get('company')

emails = list(uniqueemail)

finalemail = []

for email in emails:

with open("extensionesweb.txt") as f:

[finalemail.append(email) for line in f if email.split('.')[-1] in line]

# Que nos muestre la web original(no de donde ha salido)

items = {

'company' : company,

'web': response.url.split('/')[2],

'email': finalemail

}

uniqueemail.clear() #reseteamos el contenido de uniqueemail

self.features.append(items)

with open('allData.json', 'w') as json_file:

json_file.write(json.dumps(self.features, indent=2) + '\n')

with open('allData.json') as f:

datos = json.load(f)

#usando pandas agrupamos por empresa

df = pd.DataFrame(datos).groupby(['company'])\

.agg({'web':lambda x: list(set(x)), 'email':lambda x: list(x)})\

.reset_index()\

.to_dict('r')

#si queremos que se vean todos los enlaces, quitamos el set() de 'web'

#y al definir items{} elegimos la que no corte la web y se quede con la primera parte

#agrupamos los emails y separamos los que tienen en una lista(data)

# y los que no en otra (fail)

data = []

fail = []

for i in df:

h = i['email']

n = []

for j in range(len(h)):

n.extend(h[j])

o = list(set(n))

i['email'] = o

i['web'] = ''.join(i['web']) #quitamos el modo 'lista' [www.balbalbla.com]

if i['email'] == []:

fail.append(i)

else:

data.append(i)

# if items['email'] == []:

# self.fail.append(items)

with open('fail.json', 'w') as json_file:

json_file.write(json.dumps(fail, indent=2) + '\n')

with open('good.json', 'w') as json_file:

json_file.write(json.dumps(data, indent=2) + '\n')

Now we have two files, one with those who have managed to extract some email (good.json) and one with those who have not (fail.json).:

good.json:

[

{

"company": "Jopsons Jewellers",

"web": "jopsonsjewellers.co.uk",

"email": [

"[email protected]"

]

},

{

"company": "Joyer\u00eda Libremol",

"web": "www.joyerialibremol.com",

"email": [

"[email protected]"

]

},

{

"company": "Juwelier Bektas e.K.",

"web": "juwelier-bektas.de",

"email": [

"[email protected]"

]

},

.........................................................

.........................................................

.........................................................

{

"company": "luxurywatches",

"web": "luxurywatchesnewyork.com",

"email": [

"[email protected]",

"[email protected]"

]

},

{

"company": "van Houten Uhren - Gregory van Houten",

"web": "www.vanhouten-uhren.de",

"email": [

"[email protected]"

]

}

]

fail.json:

[

{

"company": "CJ Charles Jewelers",

"web": "www.cjcharles.com",

"email": []

},

{

"company": "CORELLO",

"web": "corello.es",

"email": []

},

{

"company": "CRONOM\u00c9TRICA",

"web": "www.linguee.de",

"email": []

},

{

"company": "Chronoshop s.r.o.",

"web": "www.seikowatches.com",

"email": []

},

.........................................................

.........................................................

.........................................................

{

"company": "Luxusboerse Zurich Exclusive",

"web": "www.luxusboerse.ch",

"email": []

},

{

"company": "M.P Preziosi",

"web": "www.mpreziosi.it",

"email": []

},

{

"company": "Mika\u00ebl Dan",

"web": "www.mikaeldan.com",

"email": []

}

]

4 – Review the results and complete missing emails

– We finally have all emails for these type of stores

[

{

"company":"Beverly Hills Watch Company",

"web":"www.beverlyhillswatch.com",

"email":[

"[email protected]"

]

},

{

"company":"COMMIT",

"web":"www.commit-watch.co.jp",

"email":[

"[email protected]"

]

},

{

"company":"CORTI GIOIELLI",

"web":"corti-gioielli.it",

"email":[

"[email protected]"

]

},

.........................................................

.........................................................

.........................................................

{

"company":"Almar",

"web":"www.almarwatches.com",

"email":[

"[email protected]"

]

},

{

"company":"Alward Luxury Watches",

"web":"luxurywatches.ie",

"email":[

"[email protected]"

]

},

{

"company":"Antike Uhren Eder",

"web":"www.uhreneder.de",

"email":[

"[email protected]"

]

}

]